Nirav Rana, Development Manager, Azure Architect and AI Engineering Expert, designs scalable, cost-effective software solutions for multiple sectors

By Ellen F. Warren

Nirav Rana is a Development Manager for Ernst & Young, a “Big Four” accounting firm, where he leads multiple development teams working on the company’s cutting-edge human resources technology platform. Over the past two decades, he has worked as a Development Manager, Lead Engineer, and Software Architect for some of the world’s most prominent companies, with industry specialisations in tax services, information technology (IT) services, and retail.

As a development manager with a strong record of driving innovation and leading large-scale technology projects, Nirav has significant expertise in team and people management and strategic planning. He is highly regarded for his skill and experience in leveraging advanced technologies, including Microsoft Azure cloud-based architecture, AI technologies such as OpenAI, and security-focused solutions, to enhance business processes and expedite development.

Nirav is Microsoft certified as an Azure Solutions Architect Expert and an Azure AI Engineer.

He earned his Bachelor of Engineering degree in Electronics and Communication from the Nirma Institute of Technology in Ahmedabad, India, and received his Master’s degree in Computer Engineering from the University of North Texas in Denton, Texas (US).

We spoke with Nirav about the diverse ways in which he is applying emerging technologies to achieve improved outcomes and process efficiencies, ranging from human resources to tax compliance and other recent projects.

Q: Nirav, prior to your current role as a Development Manager for Ernst & Young, you were a technology leader and solution architect for global organisations such as Wolters Kluwer, Modis Inc., and Infosys, among others. Your career has also run in parallel with the introduction and rise of the Microsoft Azure cloud computing platform, in which you have singular expertise. As an early adopter who has leveraged Azure across numerous and diverse use cases over the past 15 years, how did cloud platforms change the way you approach software engineering and solution design?

A: Cloud platforms like Microsoft Azure have completely transformed how software engineering and solution design are approached. Traditionally, software projects involved significant time and investment in physical infrastructure, often resulting in delays and inefficiencies. Azure introduced the ability to rapidly provision resources on demand, allowing developers to focus on creating innovative solutions rather than managing infrastructure challenges.

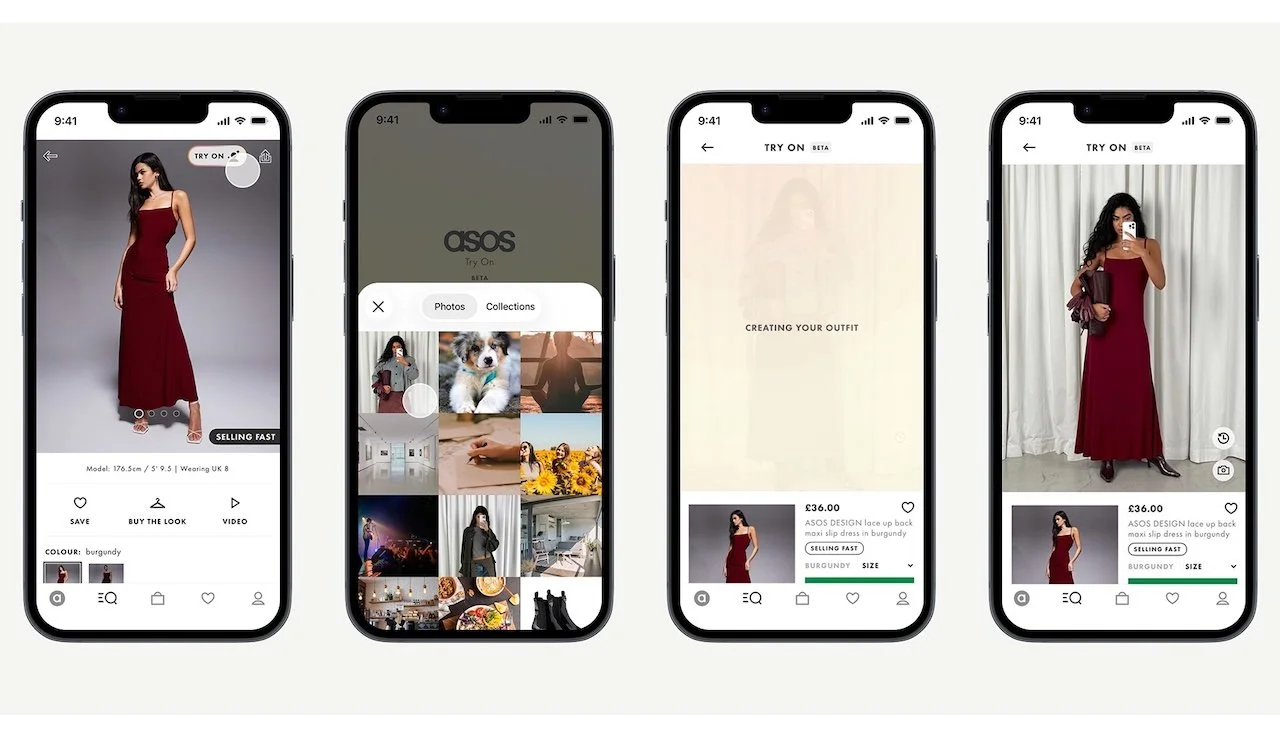

In my role, I’ve leveraged Azure’s extensive suite of services to design scalable, secure, and cost-effective solutions for industries such as tax compliance, IT services, and retail. My experience with the Microsoft mPoS Retail Solution stands out as a prime example.

mPoS is the Modern Point of Sale (PoS) application, which enables retail staff to process customer orders and sales, manage inventory, and handle daily operations, among other functions, either on mobile devices anywhere in the store or on PC-based registers. I successfully led the implementation of Microsoft’s Dynamics 365 (D365) Finance and Operations and Retail E-commerce/mPoS project, utilising Azure to ensure scalability and reliability.

Currently, I lead the development team, using Azure services such as Azure Application Insights, Logic Apps, Azure Functions, and Service Bus to create scalable and reliable tax solutions. I’ve also pioneered innovations such as integrating GitHub Copilot and Azure OpenAI services to expedite development and implement generative AI solutions.

Azure’s integration with Kubernetes has also enabled me to deploy containerised applications that scale seamlessly based on demand, optimising resource usage while reducing costs. Tools like Azure DevOps have enhanced team collaboration, enabling continuous integration and delivery pipelines that streamline development workflows.

Azure’s robust AI and machine learning capabilities have also allowed me to integrate automation into projects, improving decision-making and reducing manual workloads. By adopting cloud-native architecture, I’ve ensured high availability and fault tolerance for applications, which is critical for businesses that rely on uninterrupted service. Overall, Azure’s innovative cloud offering has enabled me to deliver transformative solutions that align with evolving business needs and technological advancements.

Q: System optimisation has been a consistent objective for you across the various industries you have worked in throughout your career. You have also been at the forefront of applying emerging technologies such as artificial intelligence (AI) in your design and development projects. How are you using AI to enhance system and process optimisation? Are there common denominators in your designs for different sectors, or is each iteration unique?

A: Creating flexible and scalable systems is fundamental to building modern applications that can adapt to changing needs and future growth. A well-thought-out system design ensures efficiency, reliability, and the ability to integrate emerging technologies seamlessly.

AI has become a transformative tool in this regard, especially with the rise of generative AI, which has brought new opportunities to improve systems and streamline processes. I have created proofs of concept that use AI for tasks such as organising customer data based on past patterns, summarising documents, and building AI agents to simplify application workflows. These proofs of concept show how AI can make systems faster and more efficient.

Whether an AI solution carries over to different industries depends on the task. For example, a text-to-SQL agent, which lets you ask questions of a database in plain language, can work in any field with some adjustments for the underlying database. On the other hand, solutions like document automation, which fills out specific forms, need more customisation because forms vary by industry. While the core technology can handle common tasks, it must be adapted to fit each sector’s unique needs.

Q: Earlier in your career you were focused on using AI to improve the healthcare experience. What can you tell us about that innovation? How can engineers deploy emerging technologies to produce better patient outcomes and experiences in healthcare settings?

A: AI can significantly transform healthcare by improving the experiences of both patients and health workers. One key application is streamlining appointment scheduling, which reduces patient wait times and optimises clinic workflows. AI-powered tools like document intelligence and optical character recognition (OCR) can automate the completion of patient forms, minimising manual entry and improving accuracy. This not only enhances the patient intake process, but also frees up healthcare workers to focus on critical tasks.

Natural language processing (NLP) allows healthcare providers to quickly update records and retrieve patient information using simple voice commands or brief text inputs. This capability saves time, reduces administrative burdens, and ensures critical data is readily accessible during patient consultations.

Additionally, wearable devices equipped with AI can continuously monitor patient vitals and transmit data to healthcare providers in real time. For example, an AI system analysing a diabetic patient’s wearable device data could detect a dangerous spike in blood sugar levels and instantly notify the doctor. The doctor can then contact the patient with tailored instructions to prevent complications.
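To make the monitoring example concrete, here is a minimal sketch of the alerting step. It assumes readings arrive as timestamped glucose values in mg/dL and uses a simple fixed-threshold and rate-of-rise rule in place of a trained model; the `Reading` type, the `detect_spikes` function, and both threshold values are hypothetical placeholders for illustration, not clinical values.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional


@dataclass
class Reading:
    minute: int      # minutes since monitoring started
    glucose: float   # blood glucose in mg/dL


# Hypothetical thresholds for illustration only (not clinical values).
HIGH_GLUCOSE_MG_DL = 250.0
MAX_RISE_MG_DL_PER_MIN = 3.0


def detect_spikes(readings: Iterable[Reading],
                  notify: Callable[[str], None]) -> List[Reading]:
    """Flag readings that are too high or rising too fast, and notify a clinician."""
    flagged: List[Reading] = []
    previous: Optional[Reading] = None
    for reading in readings:
        too_high = reading.glucose >= HIGH_GLUCOSE_MG_DL
        too_fast = False
        if previous is not None and reading.minute > previous.minute:
            # Rate of change in mg/dL per minute since the previous reading.
            rate = (reading.glucose - previous.glucose) / (reading.minute - previous.minute)
            too_fast = rate >= MAX_RISE_MG_DL_PER_MIN
        if too_high or too_fast:
            flagged.append(reading)
            notify(f"minute {reading.minute}: glucose {reading.glucose:.0f} mg/dL")
        previous = reading
    return flagged


# Example: collect alerts in a list instead of paging a doctor.
alerts: List[str] = []
stream = [Reading(0, 120.0), Reading(5, 130.0), Reading(10, 160.0), Reading(15, 260.0)]
flagged = detect_spikes(stream, alerts.append)
print(alerts)
```

Checking the rate of rise as well as the absolute level lets the sketch raise an alert before a reading crosses the high threshold, which is the kind of early warning the answer describes.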

Furthermore, AI can analyse large datasets to predict disease outbreaks, personalise treatment plans, and identify high-risk patients for early intervention. These innovations collectively enhance patient care, improve outcomes, and drive efficiency in the healthcare ecosystem.

Q: As an example of a different use case, you recently wrote about leveraging AI for effective indirect tax compliance. Can you briefly describe the challenges associated with tax compliance, and how you designed an AI solution to streamline and improve tax compliance processes?

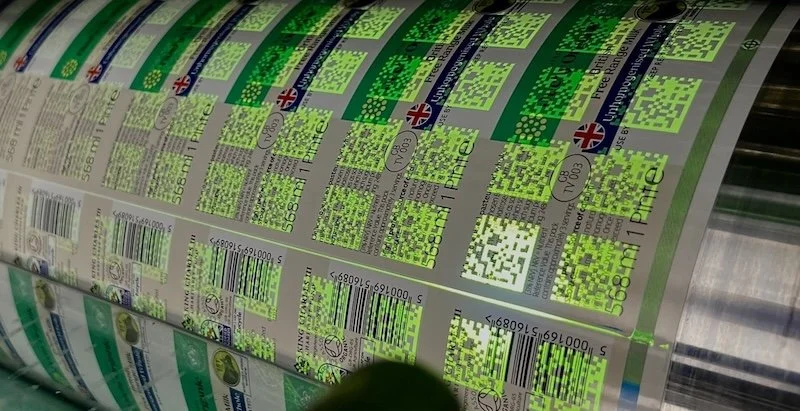

A: Indirect tax compliance is one of the most complex areas for organisations, particularly in the United States, which has over 10,000 tax jurisdictions. Each jurisdiction has its own tax rates, rules, exemptions, filing formats, deadlines, and reporting requirements, creating significant challenges. Organisations must navigate discrepancies in definitions of taxable goods and services, varying exemption certificates, and frequent changes to regulations.

Manually tracking these updates across jurisdictions is time-intensive and prone to errors, leading to potential non-compliance risks such as penalties, audits, and reputational damage. AI can effectively address these challenges. Robotic Process Automation (RPA) can scrape jurisdiction websites for updates, while generative AI can summarise rule changes, enabling tax analysts to quickly assess their impact. Document Intelligence further streamlines filing by automating tax document preparation and submission, supporting compliance across jurisdictions.

Q: Two innovative features of your indirect tax solution design involved using AI in conjunction with blockchain technology, and integrating predictive analytics. How do you leverage blockchain in this context? And what benefits do you derive from AI’s predictive analytics capabilities?

A: One of the key advantages of blockchain is its ability to provide transparency by recording transactions across all nodes in the network. This ensures an immutable and verifiable ledger, enhancing trust and accountability.

In the context of indirect tax solutions, blockchain can be leveraged to execute smart contracts triggered by predefined conditions, such as tax thresholds or compliance deadlines. This automation reduces the risk of human error, ensures timely compliance, and streamlines processes such as tax remittance and refund management.

AI’s predictive analytics capabilities complement this by utilising historical and real-time data to forecast future tax liabilities and cash flow requirements. By identifying patterns and trends, predictive analytics enables organisations to anticipate potential tax obligations, avoid cash flow shortages, and optimise financial planning.
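The forecasting idea can be illustrated with a deliberately simple model. The sketch below fits an ordinary least-squares linear trend to past monthly tax liabilities, projects the next few months, and flags months where the projected liability exceeds the cash expected to be available. A production system would likely use richer features and models; the function names and sample figures here are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_linear_trend(history: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of liability = intercept + slope * period_index."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two periods of history")
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def forecast_liabilities(history: Sequence[float], periods: int) -> List[float]:
    """Project the fitted trend forward for the next `periods` periods."""
    intercept, slope = fit_linear_trend(history)
    n = len(history)
    return [intercept + slope * (n + k) for k in range(periods)]


def cash_shortfalls(forecast: Sequence[float], cash_available: Sequence[float]) -> List[int]:
    """Return indices of future periods where projected tax due exceeds available cash."""
    return [i for i, (due, cash) in enumerate(zip(forecast, cash_available)) if due > cash]


# Illustrative monthly liabilities (in thousands) and planned cash reserves.
history = [100.0, 110.0, 120.0, 130.0]
projected = forecast_liabilities(history, periods=3)
shortfalls = cash_shortfalls(projected, cash_available=[150.0, 145.0, 170.0])
print(projected, shortfalls)
```

Flagging a shortfall ahead of time is what allows the organisation to allocate funds early rather than paying penalties and interest after a missed payment.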

For example, an organisation can proactively allocate resources to meet upcoming tax payments or adjust strategies to minimise penalties and interest on delayed payments. Together, the integration of blockchain and predictive analytics provides a robust, data-driven approach to managing indirect taxes, improving operational efficiency, compliance accuracy, and financial stability.

Q: Whether users are implementing AI for healthcare, tax, or any other system or sector, what concerns and risks should be taken into consideration, and how do you mitigate for them? Beyond the cyber risks associated with bad actors, how can organisations protect themselves from system problems like biases and potentially inaccurate data that may influence critical decisions?

A: An AI system should be fair and free of inherent biases. It must be transparent about how its results were computed. Most importantly, any AI tool needs to protect user data and maintain privacy. AI systems require regular monitoring to ensure that they remain free of bias and continue to protect user privacy.

Organisations must also be vigilant about the risk of AI hallucination, in which models produce inaccurate or misleading outputs due to poor training data, incorrect assumptions made by the model, or malicious interference by bad actors. To mitigate these risks, organisations should establish robust policies, conduct regular system monitoring, and safeguard data using techniques such as encryption and anonymisation.

Equally important is involving stakeholders in the AI process to make its workings more transparent and comprehensible. By fostering a culture of accountability and keeping a close eye on AI systems, organisations can ensure they remain safe, fair, and trustworthy, while minimising potential biases and errors.

Q: Your current focus is a proprietary global workforce management tool that offers a single integrated solution to manage data from multiple sources in a simple, user-friendly interface. As the Development Manager for this platform, you oversee a team of nearly 50 people and must collaborate with other engineering leaders to maintain technical standards and best practices, while continuing to innovate and manage risks.

And this is yet another example of how you utilised Azure, this time with Kubernetes, to optimise the infrastructure and create scalability and cost-effectiveness. What can you tell us about the evolution of this tool and how it improves efficiency? What makes it unique?

A: In traditional hosting environments, there were often deployment issues due to mismatched configurations and missing dependent libraries, and resolving these issues involved substantial manual work. A container-based approach solves these issues, because the application and everything it depends on are packaged into a single deployable unit.

These containers are hosted on Kubernetes, an open-source container orchestration platform that automates many manual processes. Kubernetes, which was initially developed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF), has gained popularity because it provides high scalability, availability, and infrastructure flexibility.

Kubernetes runs containers inside “pods”, the smallest deployable units of computing that it can create and manage, and excels at horizontal scaling, adding or removing pods based on load. Kubernetes can run on virtual machines, on physical servers, or as a managed cloud offering, which provides flexibility in hosting.
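The horizontal scaling decision follows a simple ratio rule. The Kubernetes Horizontal Pod Autoscaler documents its target as `desiredReplicas = ceil[currentReplicas * (currentMetricValue / desiredMetricValue)]`, and skips scaling when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces only that core calculation, clamped to minimum and maximum replica counts; it ignores stabilisation behaviour, pod readiness, and multi-metric handling, and the function name and default limits are illustrative.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA ratio rule, clamped to [min_replicas, max_replicas]."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current count to avoid flapping.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, 90, 60))  # 6
# 4 pods averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, 30, 60))  # 2
```

Rounding up with `ceil` errs on the side of capacity, while the tolerance band prevents the pod count from oscillating when load hovers near the target.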

In our application, Kubernetes is deployed on Microsoft Azure, leveraging Azure Kubernetes Service (AKS). This allows us to benefit from Azure's robust cloud infrastructure, including automated scaling, integrated security features, and seamless integration with other Azure services like Azure Monitor for performance tracking and Azure DevOps for streamlined CI/CD pipelines. Using Azure with Kubernetes further enhances scalability and cost-effectiveness while simplifying operational overhead.

Generally, organisations opt for a cloud-based managed offering that requires minimal management by the internal IT team. Kubernetes provides robust orchestration capabilities, automating tasks such as deployment, scaling, and resource management. Traditional hosting typically requires manual intervention for these tasks, which can be time-consuming and error-prone. Kubernetes also provides namespace isolation, network segmentation, and role-based access control (RBAC) to enhance security.

Q: If other organisations were to embark on this type of multi-faceted, large-scale project, what pathway to development would you prescribe? What guidance can you offer for developing technical solutions and architecture and managing the process through the design and implementation stages?

A: Organisations embarking on large-scale AI projects should begin with comprehensive planning to identify use cases that deliver maximum value to their customers and the organisation. This requires aligning organisational goals, customer needs, and operational impacts, while engaging stakeholders to ensure shared objectives. Data compliance is a critical concern: adherence to legal standards and robust data governance frameworks are essential to safeguard sensitive information.

Approvals for data sharing must be secured to prevent privacy issues and potential reputational risks. Design discussions should cover technology, deployment, hosting, scalability, and future enhancements, integrating input from cross-functional teams to address challenges and align with long-term strategies. Organisations should adopt a cautious, phased approach to implementation, starting with pilot programmes to validate solutions before scaling.

This minimises risks and ensures benefits are realised. A culture of continuous learning is vital to adapt to AI advancements. Frequent monitoring of AI systems for accuracy, transparency, and fairness is crucial, with regular audits to address biases and enhance performance. Training teams to manage and interpret AI outputs responsibly further ensures ethical use. Through strategic planning, careful execution, and diligent oversight, organisations can effectively handle the complexities of large-scale AI projects while maximising their value and impact.

Q: Throughout your career you have supervised, trained, and mentored individuals and teams of all sizes. What strategies and leadership skills do you bring to these roles? How do you motivate innovation and inspire collaboration to produce high-performing teams?

A: As an Engineering Manager, I have focused on creating strong, high-performing teams by supporting their growth and development. I believe in listening to my team members, understanding their strengths and challenges, and working with them to create personalised plans that help them achieve their goals while meeting the needs of the organisation.

I also guide and mentor team members by sharing my experience and helping them navigate complex challenges. To encourage innovation, I create an environment where team members feel safe to share ideas and experiment without fear of failure. I also promote collaboration by fostering open communication, encouraging teamwork across different groups, and keeping everyone aligned with clear goals and a shared vision.

Recognising achievements, providing constructive feedback, and empowering team members to take ownership of their work are some of the ways I keep my teams motivated and engaged. These approaches have helped me lead teams that deliver innovative, high-quality solutions while maintaining a strong sense of teamwork and satisfaction.
