Data engineering expert Sanjay Puthenpariyarath designs innovative tech solutions to improve organisational outcomes

By Ellen F. Warren

Sanjay Puthenpariyarath leads complex technology transformation projects for Fortune 500 companies in various sectors, including banking, telecom, and e-commerce.

With nearly 20 years of data engineering experience, specialising in Big Data processing, data pipeline development, cloud data engineering, data migration, and database performance tuning, he is highly regarded for his expertise in designing and implementing innovative, scalable data architecture solutions using cutting-edge technologies.

As a master builder of robust ETL pipelines, data warehouses, and real-time data processing systems, he has optimised data workflows that have helped organisations achieve transformative operational outcomes, such as reducing processing times and associated costs by up to 50%, saving $1 million in software licensing fees, and eliminating $1 million in billing revenue leakage. He has also automated integrated database applications, enabling companies to save nearly $5 million in costs previously incurred for manual labour.

Puthenpariyarath received a Bachelor of Engineering degree in Electronics and Communication Engineering from Anna University, India, and earned a Master of Science degree in Information Technology from the University of Massachusetts, Lowell (US).

We spoke with him about the challenges confronting global organisations that rely on legacy technology systems or are trying to transition to and integrate new tools, and about how he drives technology transformation.

RTIH: Sanjay, much of your work in the last two decades has involved updating technology and processes for global companies. What are the obstacles related to legacy systems that you are helping these organisations overcome?

SP: The key obstacles associated with legacy systems include outdated and overly complex designs, high operational costs, poor performance, and shortcomings in scalability, security, compliance, portability, and integration with modern tools.

To address these challenges, legacy systems require an architectural overhaul, along with the adoption of modern tools, technologies, and strategies. For example, outdated designs often have redundant workflows that no longer serve any purpose, leading to increased costs and reduced performance.

These redundancies may be eliminated by conducting a thorough gap analysis, refactoring workflows, and creating a simplified design with clear documentation to streamline operations.
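A gap analysis of this kind can start from a simple inventory. The sketch below is a hypothetical illustration, not a method described in the interview: it treats workflows as a dependency graph and lists any workflow whose outputs never feed a required deliverable. The `Workflow` fields, the dataset names, and the idea of a "deliverables" set are all assumptions; flagged workflows are candidates for review, not for automatic deletion.

```python
from dataclasses import dataclass, field


@dataclass
class Workflow:
    name: str
    consumes: set = field(default_factory=set)  # datasets the workflow reads
    produces: set = field(default_factory=set)  # datasets the workflow writes


def find_redundant(workflows, deliverables):
    """Return names of workflows whose outputs never reach a required deliverable."""
    producers = {}  # dataset name -> workflows that write it
    for wf in workflows:
        for dataset in wf.produces:
            producers.setdefault(dataset, []).append(wf)

    # Walk backwards from the deliverables, marking every workflow that feeds them.
    needed, visited, stack = set(), set(), list(deliverables)
    while stack:
        dataset = stack.pop()
        if dataset in visited:
            continue
        visited.add(dataset)
        for wf in producers.get(dataset, []):
            needed.add(wf.name)
            stack.extend(wf.consumes)

    return sorted(wf.name for wf in workflows if wf.name not in needed)


# Hypothetical inventory: the fax export feeds nothing anyone still needs.
inventory = [
    Workflow("load_orders", consumes={"raw_orders"}, produces={"orders"}),
    Workflow("daily_sales", consumes={"orders"}, produces={"sales_report"}),
    Workflow("old_fax_export", consumes={"orders"}, produces={"fax_file"}),
]
print(find_redundant(inventory, {"sales_report"}))  # ['old_fax_export']
```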

Additionally, legacy systems often incur significant operational expenses due to the use of vendor-specific software with high licensing fees, on-premises datacentres, physical security, server maintenance, and ongoing training costs.

A cost-effective solution would be to transition to open source tools and technologies, reducing reliance on expensive proprietary software. Moving to a cloud-based solution can also help reduce on-premises datacentre expenses, and solve issues related to a lack of scalability, such as handling increasingly large volumes of Big Data.

In outdated systems, the existing tools may not be adequate to manage large data sets. Migrating on-premises infrastructure to scalable cloud-based platforms can provide the flexibility needed to scale with demand.

As data volumes grow exponentially, legacy systems, which often remain static, may struggle to keep up. Optimising the system through techniques such as caching, indexing, performance tuning, and partitioning, along with adopting more efficient storage solutions, can improve processing speed and overall performance.
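As a toy illustration of two of these techniques, the Python sketch below partitions records by a key so that a query scans only one partition (partition pruning), and caches a repeated lookup with `functools.lru_cache`. In practice these optimisations live in the database or processing engine; the record layout and the `store_region` lookup here are hypothetical.

```python
from collections import defaultdict
from functools import lru_cache


def partition_by(records, key):
    """Group records into partitions by `key`, like a table partitioned by date."""
    partitions = defaultdict(list)
    for rec in records:
        partitions[rec[key]].append(rec)
    return partitions


def total_for_day(partitions, day):
    # Partition pruning: only the matching partition is scanned, not every record.
    return sum(rec["amount"] for rec in partitions.get(day, []))


@lru_cache(maxsize=1024)
def store_region(store_id):
    # Hypothetical slow reference-data lookup; results are cached after the first call.
    return "north" if store_id % 2 else "south"


sales = [
    {"day": "2024-01-01", "store": 1, "amount": 10.0},
    {"day": "2024-01-01", "store": 2, "amount": 5.0},
    {"day": "2024-01-02", "store": 1, "amount": 7.5},
]
parts = partition_by(sales, "day")
print(total_for_day(parts, "2024-01-01"))  # 15.0
store_region(1)
store_region(1)
print(store_region.cache_info().hits)  # 1
```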

Furthermore, older systems often lack modern security features, posing significant threats to data integrity and compliance. Encryption helps protect Personally Identifiable Information (PII) throughout the data lifecycle. Adhering to compliance regulations, establishing data governance frameworks, and regularly auditing access controls are essential to maintaining system security.
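Python's standard library has no symmetric encryption, so the sketch below swaps in a related but different technique: keyed pseudonymisation (tokenisation) with HMAC-SHA256. Unlike encryption it is one-way, so it only suits cases where analytics need to join or count on a PII field without seeing it; reversible protection would need a vetted encryption library. The `PII_TOKEN_KEY` environment variable and the field names are assumptions for illustration.

```python
import hashlib
import hmac
import os

# Assumption: a real deployment would fetch this key from a key-management service.
PII_KEY = os.environ.get("PII_TOKEN_KEY", "demo-only-key").encode()


def tokenise(value, key=PII_KEY):
    """Replace a PII value with a deterministic keyed token (HMAC-SHA256 hex digest)."""
    normalised = value.strip().lower()
    return hmac.new(key, normalised.encode(), hashlib.sha256).hexdigest()


def mask_record(record, pii_fields):
    """Return a copy of `record` with the listed PII fields tokenised."""
    return {k: tokenise(v) if k in pii_fields else v for k, v in record.items()}


row = {"customer_id": 42, "email": "Jane@Example.com", "plan": "lite"}
safe = mask_record(row, {"email"})
print(safe["plan"], len(safe["email"]))  # lite 64
```

Because the token is deterministic for a given key, the same email always maps to the same token, so masked tables can still be joined on it.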

Finally, legacy systems may be difficult to port to new environments due to their dependency on specific software components or custom logic. To overcome this limitation, dependencies should be minimised by finding alternative solutions, and the logic should be redesigned to make it portable.

Another challenge is incompatibility with modern tools, which can often be solved by building Application Programming Interface (API) layers to facilitate interaction between older systems and newer technologies.
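A minimal sketch of such an API layer, assuming a legacy system that returns fixed-width text records: an adapter class parses the legacy format and exposes a JSON-friendly interface to newer tools. The record layout, the `Customer` type, and the `fetch_raw` callable are hypothetical stand-ins, not details of any system described here.

```python
import json
from dataclasses import asdict, dataclass

# Hypothetical fixed-width layout of a legacy customer record: (field, start, end).
LEGACY_LAYOUT = [("customer_id", 0, 8), ("name", 8, 28), ("balance_cents", 28, 38)]


@dataclass
class Customer:
    customer_id: str
    name: str
    balance: float


class LegacyCustomerAdapter:
    """Thin API layer that translates legacy fixed-width rows into a modern shape."""

    def __init__(self, fetch_raw):
        # `fetch_raw` stands in for whatever call reaches the legacy system
        # (file read, stored procedure, terminal session); it is an assumption.
        self._fetch_raw = fetch_raw

    def get_customer(self, customer_id):
        raw = self._fetch_raw(customer_id)
        fields = {name: raw[start:end].strip() for name, start, end in LEGACY_LAYOUT}
        return Customer(
            customer_id=fields["customer_id"],
            name=fields["name"],
            balance=int(fields["balance_cents"]) / 100,
        )

    def get_customer_json(self, customer_id):
        return json.dumps(asdict(self.get_customer(customer_id)))


def fake_legacy_fetch(customer_id):
    # Simulated legacy output: 8-char ID, 20-char name, 10-digit balance in cents.
    return customer_id.zfill(8) + "Jane Smith".ljust(20) + "0000012550"


api = LegacyCustomerAdapter(fake_legacy_fetch)
print(api.get_customer_json("42"))
# {"customer_id": "00000042", "name": "Jane Smith", "balance": 125.5}
```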

RTIH: How do you design and implement major technology transitions without impacting a company’s operations or customer experience?

SP: Customer experience and company operations should not be negatively impacted if a structured and strategic approach is carefully followed. Comprehensive planning and system assessment are crucial.

A gap analysis is necessary to understand the current systems, processes, workflows, and technologies in comparison to the future system. All stakeholders should be engaged and aligned, with risks identified in advance and mitigated according to a defined plan. It is also important to establish a robust backup and recovery strategy, thoroughly testing it to prevent customer service interruptions during critical issues.

I advise adopting a phased transition approach, rather than a 'big bang,' to minimise risks. Parallel operations, in which the old and new systems run simultaneously, help confirm that the new system produces the same results, with improved performance, before fully switching over.
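The comparison step of a parallel run can be sketched as a reconciliation function: feed the same input batch to both systems, then report missing, extra, and mismatched records along with each system's run time. This is a hypothetical illustration; the job functions, the `id` key, and the report shape are assumptions.

```python
import time


def reconcile(legacy_rows, new_rows, key="id"):
    """Compare the outputs of the legacy and new systems record by record."""
    legacy = {row[key]: row for row in legacy_rows}
    new = {row[key]: row for row in new_rows}
    report = {
        "missing_in_new": sorted(legacy.keys() - new.keys()),
        "extra_in_new": sorted(new.keys() - legacy.keys()),
        "mismatched": sorted(k for k in legacy.keys() & new.keys() if legacy[k] != new[k]),
    }
    report["ok"] = not any(report.values())
    return report


def parallel_run(legacy_job, new_job, batch, key="id"):
    """Run both systems on the same batch, then reconcile results and record timings."""
    outputs, seconds = {}, {}
    for label, job in (("legacy", legacy_job), ("new", new_job)):
        start = time.perf_counter()
        outputs[label] = job(batch)
        seconds[label] = time.perf_counter() - start
    report = reconcile(outputs["legacy"], outputs["new"], key)
    report["seconds"] = seconds
    return report


# Hypothetical stand-ins for the two systems' jobs.
def legacy_job(batch):
    return [{"id": r["id"], "total": round(r["qty"] * r["price"], 2)} for r in batch]


def new_job(batch):
    totals = {}
    for r in batch:
        totals[r["id"]] = round(r["qty"] * r["price"], 2)
    return [{"id": k, "total": v} for k, v in totals.items()]


batch = [{"id": 1, "qty": 2, "price": 9.99}, {"id": 2, "qty": 1, "price": 5.0}]
result = parallel_run(legacy_job, new_job, batch)
print(result["ok"])  # True
```

In a real parallel run the two jobs would be the legacy and new pipelines reading the same production input, and `ok` would need to hold across many batches before switching over.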

Rigorous testing, including quality assurance (QA), user acceptance testing (UAT), performance testing, and load testing, is key to a successful transition. Subject matter experts, business stakeholders, and architects should review the results.

Prepare thorough documentation and diagrams, and train employees on the new tools, technologies, and processes; business users in particular must be trained on the upcoming changes. A detailed release plan should also be prepared, with “dress rehearsals” conducted before the actual launch. After implementation, continuous monitoring should be enabled so that any issues are addressed promptly.

RTIH: You are using relatively new cloud-based and open source technologies, and APIs, to help businesses migrate from legacy systems and improve operational processes - and you often design innovative new ways to use these tools. Can you give us an example of an effective technology transformation?

SP: I recently led a technology transformation project for a retail giant whose on-premises infrastructure was outdated and whose application struggled to process billions of records. We migrated its datacentre to a scalable and secure AWS cloud-based infrastructure, resulting in a 50% reduction in operational costs.

The legacy system relied on relational databases, control files, stored procedures, and SQL loaders. We redesigned the data engineering platform using Apache Spark, Scala, and other open-source technologies, enabling in-memory data processing. This eliminated the need to repeatedly read and write interim data to the database, resulting in a 40% improvement in processing time.
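Spark's distributed, in-memory model is hard to show in a few lines, but the core idea, passing records from stage to stage without writing interim results back to a database, can be sketched with Python generators. This single-process example is only an analogy for a Spark job; the comma-separated feed format and the rule that negative quantities are returns are assumptions.

```python
def parse(lines):
    """Stage 1: turn raw CSV-like lines into records, one at a time."""
    for line in lines:
        store, sku, qty = line.split(",")
        yield {"store": store, "sku": sku, "qty": int(qty)}


def drop_returns(rows):
    """Stage 2: filter in memory; negative quantities are treated as returns here."""
    return (r for r in rows if r["qty"] > 0)


def units_per_store(rows):
    """Stage 3: aggregate. No interim table is written between stages."""
    totals = {}
    for r in rows:
        totals[r["store"]] = totals.get(r["store"], 0) + r["qty"]
    return totals


feed = ["s1,A,3", "s1,B,-1", "s2,A,4", "s1,C,2"]
print(units_per_store(drop_returns(parse(feed))))  # {'s1': 5, 's2': 4}
```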

Additionally, the original architecture used vendor-specific middleware to publish fine-grained data to various target systems, which incurred millions in licensing costs. We replaced this with AWS Lambda functions, saving the company $1 million in licensing fees.
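The interview does not say how these Lambda functions were triggered. Assuming an Amazon SNS trigger (the SNS-and-Lambda pattern mentioned for the subscriber migration), a publishing function might look roughly like the Python sketch below. It reads each payload from `Records[*].Sns.Message`, where SNS places the message text in the Lambda event; the `ROUTES` table, target names, and `publish_to_target` are hypothetical placeholders for real downstream writes.

```python
import json

# Hypothetical routing table: which downstream system receives each message type.
ROUTES = {"price_change": "pos_system", "stock_level": "inventory_service"}


def publish_to_target(target, record, sink):
    # Placeholder for the real write (HTTP call, queue, database insert).
    # Appending to an in-memory dict lets the sketch run without AWS.
    sink.setdefault(target, []).append(record)


def handler(event, context, sink=None):
    """Lambda entry point for an SNS trigger: fan each message out to its target."""
    sink = {} if sink is None else sink
    published = 0
    for rec in event.get("Records", []):
        message = json.loads(rec["Sns"]["Message"])  # SNS delivers the payload as a string
        target = ROUTES.get(message.get("type"))
        if target is None:
            print(f"skipping unrouted message type: {message.get('type')}")
            continue
        publish_to_target(target, message, sink)
        published += 1
    return {"published": published}


event = {"Records": [
    {"Sns": {"Message": json.dumps({"type": "price_change", "sku": "A1", "price": 4.99})}},
    {"Sns": {"Message": json.dumps({"type": "unknown"})}},
]}
out = {}
print(handler(event, None, sink=out))  # {'published': 1}, after a skip notice
```

Lambda invokes the handler with only `event` and `context`; the optional `sink` argument exists so the sketch can be exercised locally without AWS.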

Ultimately, we delivered a high-speed, secure data processing system with significantly reduced costs. The project's success was driven by a careful selection of tools and technologies, in-depth gap analysis, and thorough proof of concept testing.

RTIH: For a large telecommunications technology transformation project, you were recognised for your leadership in selecting technologies, designing data flows, and integrating with existing systems, and the company noted that your expertise in Big Data technologies was crucial to the project’s success. What differentiates your expertise and approach to leading these large-scale projects?

SP: I bring a valuable combination of deep knowledge in Big Data systems, strategic technology selection, and a collaborative, results-oriented leadership style. My experience with Big Data technologies enables me to make informed decisions that maximise efficiency and reduce costs.

I specialise in using tools such as Apache Spark for distributed data processing, NoSQL databases for managing unstructured data, and real-time streaming platforms like Kafka for handling high-velocity telecommunications data. This expertise allows me to build robust systems capable of processing trillions of records while maintaining high performance and reliability.

I also emphasise reducing the costs associated with data processing infrastructure, licensing fees, and storage, while ensuring that performance is not compromised. My approach focuses on balancing cost, efficiency, and performance when designing solutions. For instance, I eliminated expensive vendor-specific software with high licensing costs, replacing it with more cost-effective alternatives without sacrificing system performance.

Choosing the right technology is critical to the success of any project. I conduct thorough gap analyses and perform proof-of-concept trials to verify the compatibility of tools and technologies with the existing system.

By analysing the current architecture and identifying problem areas, I select technologies that best meet the company's needs. Scalability and cost reduction are key infrastructure goals, which is why I migrated from on-premises systems to AWS cloud services, and utilised Big Data technologies like Apache Spark and Kafka for real-time processing, as well as Hadoop for distributed storage.

In my typical approach, I focus on designing end-to-end data flows that optimise performance, reduce latency, and ensure data integrity across various systems. By eliminating redundant processes and streamlining data pipelines, I simplify system design while maintaining high data quality.

And rather than opting for a "big bang" approach, I prefer incremental rollout, introducing new technologies while maintaining smooth operation of existing systems. Not all legacy systems are compatible with modern tools, so I utilise APIs to facilitate interaction between old and new systems, improving performance without causing disruptions.
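One common way to implement an incremental rollout, sketched here as an assumption rather than the approach used in these projects, is deterministic percentage routing: hash each customer or record ID into a stable bucket and send only buckets below the current rollout percentage to the new system. The salt string, ID format, and staged percentages are illustrative.

```python
import hashlib


def rollout_bucket(entity_id, salt="new-pipeline"):
    """Map an ID to a stable bucket 0-99 so the same customer always takes the same path."""
    digest = hashlib.sha256(f"{salt}:{entity_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 100


def use_new_system(entity_id, percent):
    # Raise `percent` in stages (for example 1 -> 10 -> 50 -> 100) as confidence grows.
    return rollout_bucket(entity_id) < percent


ids = [f"cust-{i}" for i in range(10_000)]
share = sum(use_new_system(i, 10) for i in ids) / len(ids)
print(f"{share:.1%} of customers routed to the new system")  # close to 10%
```

Because buckets are stable, customers routed to the new system at 10% stay there as the percentage rises, which keeps their experience consistent during the transition.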

To facilitate seamless transformations, I foster a collaborative work culture, ensuring that all stakeholders from developers to executives are aligned on project goals and timelines. My leadership approach emphasises clear communication, empowering teams, fostering innovation, and promoting accountability.

I organise milestone reviews, prioritisation meetings, iterative development cycles, and project reporting, and I maintain open channels for feedback to ensure project success.

RTIH: You have also made significant contributions to designing new processes for data mining that improve the business insights gained from enhanced analytics and data science. How do these results help organisations thrive in today’s highly competitive marketplace?

SP: Data has become an invaluable asset, and leveraging it to generate meaningful business insights is key to driving company growth and gaining an edge in today’s competitive marketplace.

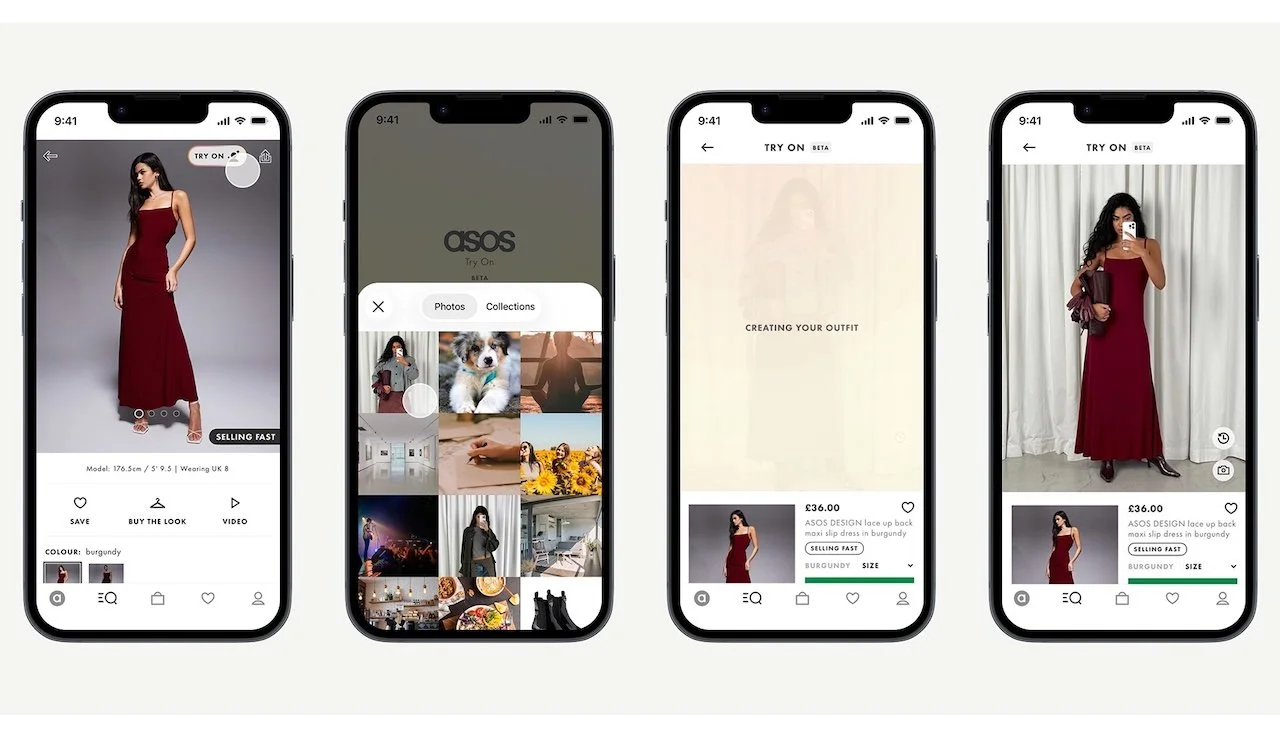

Data mining reveals key customer behaviours and preferences, enabling businesses to enhance customer experiences by customising their products, services, and marketing strategies to meet individual needs. This personalised approach boosts customer satisfaction, which is crucial for growth in highly competitive industries.

Data now plays a critical role in decision-making, providing real-time insights based on actual evidence rather than assumptions. This leads to better outcomes in marketing, operations, and customer engagement, and keeps business strategies aligned with real-world conditions.

Furthermore, by combining data mining with machine learning algorithms, businesses can predict future trends such as customer demand, market shifts, and potential risks. These predictions empower organisations to develop proactive strategies, keeping them ahead of competitors and adaptable to changing market conditions.

For instance, during the Covid-19 pandemic, predictive analysis helped mitigate subscriber churn: a "lite" version of the product, with essential features at a discounted rate, was launched and successfully retained customers.

Organisations are also using data mining to uncover new business opportunities and potential revenue streams. This could involve optimising pricing strategies or identifying untapped markets. For example, a device protection provider could expand into home device protection, capitalising on a new market segment.

RTIH: Your work on one project, in which you migrated millions of subscriber records to a new platform, was said to set a new industry standard for data migration frameworks, demonstrating the potential of certain cutting-edge technologies for handling large-scale data processing with zero tolerance for faults. How did this differ from the traditional data migration model?

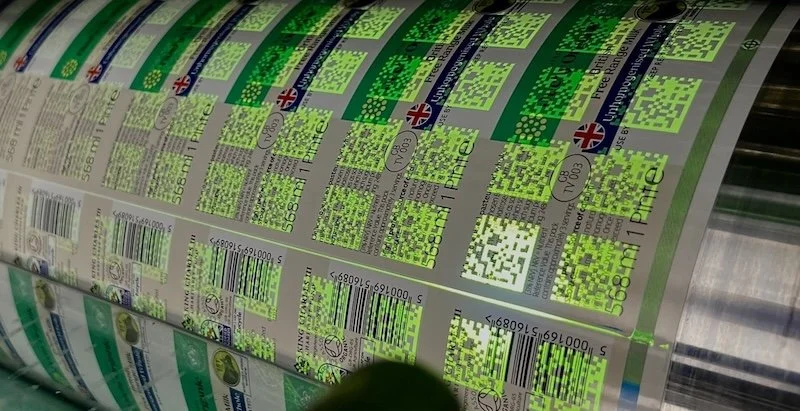

SP: The traditional data migration model for relational databases typically involves data extraction, cleansing, transformation, data quality checks, and loading, all driven by database technologies. This approach often results in frequent read and write operations, leading to performance bottlenecks and increased costs.

Additionally, the application could not be down for more than 30 minutes because of the risk of financial losses, which made completing the migration on time a high-stakes challenge.

I built a reusable and scalable data migration framework using AWS Data Pipeline, Amazon S3, Apache Spark, Scala, AWS Lambda, and Node.js. This solution enabled ultra-fast data processing and performed fine-grained data transformations in memory.

Instead of relying on data publishing software, we used SNS topics and AWS Lambda to insert data into the target system, greatly improving speed and efficiency. The result was a timely migration, with high data quality and zero tolerance for faults. Additionally, rollback mechanisms, logging, progress tracking, and migration validation were significantly easier and more efficient compared to traditional migration models.
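The rollback, logging, progress-tracking, and validation ideas can be sketched independently of AWS. The Python example below migrates rows in batches, records a checkpoint after each batch, validates row counts and an order-independent checksum, and rolls back a failed batch. It is a hypothetical sketch, not the framework described above: the target is a plain dict standing in for the real store, and rollback by deletion assumes the migrated keys did not already exist in the target.

```python
import hashlib
import json
import logging

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("migration")


def checksum(rows):
    """Order-independent checksum: sum of per-row SHA-256 prefixes, modulo 2**64."""
    total = 0
    for row in rows:
        digest = hashlib.sha256(json.dumps(row, sort_keys=True).encode()).digest()
        total = (total + int.from_bytes(digest[:8], "big")) % 2**64
    return total


def migrate(source_rows, target, transform, batch_size=2):
    """Copy transformed rows into `target` in batches, validating each batch."""
    checkpoint = 0  # next source index to migrate; a real framework would persist this
    total = len(source_rows)
    while checkpoint < total:
        batch = source_rows[checkpoint:checkpoint + batch_size]
        expected, written = [], []
        try:
            for row in batch:
                out = transform(row)
                target[out["id"]] = out
                written.append(out["id"])
                expected.append(out)
            # Validate: read back what landed and compare count and checksum.
            loaded = [target[key] for key in written]
            if len(loaded) != len(batch) or checksum(loaded) != checksum(expected):
                raise ValueError(f"validation failed at row {checkpoint}")
        except Exception:
            for key in written:  # roll back only the failed batch
                target.pop(key, None)
            log.error("batch starting at row %d rolled back", checkpoint)
            raise
        checkpoint += len(batch)
        log.info("progress: %d/%d rows migrated", checkpoint, total)
    return checkpoint


source = [{"id": i, "name": f"user{i}"} for i in range(5)]
target = {}
migrate(source, target, lambda r: {**r, "name": r["name"].upper()})
print(target[3])  # {'id': 3, 'name': 'USER3'}
```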

RTIH: How do you keep up with and ahead of Artificial Intelligence (AI) and other new technologies? You have already achieved acclaim for developing a machine learning (ML) pipeline for predictive maintenance that generated significant cost savings by reducing equipment downtime and maintenance costs. How important is predictive analysis? And how else are you adopting AI and ML principles in business operations?

SP: To stay ahead of AI and emerging technologies, continuous learning and adaptation are key. I consistently follow AI research, attend conferences, and read academic papers and blogs to deepen my understanding of the benefits that AI and ML offer.

I actively apply these insights to my projects, producing impactful results. AI and ML are increasingly critical in today’s market, and I am leveraging these technologies to solve complex business problems with notable success.

Reactive approaches are becoming obsolete, as predictive analysis now plays a crucial role in identifying trends and enabling us to prepare proactively. For example, in a large warehouse, equipment downtime severely impacts efficiency and disrupts business operations.

By using predictive analytics, we can anticipate potential failures and take preemptive action, ensuring smooth operations. Another example is the framework I developed to detect and resolve data quality issues in billions of daily customer records.

The models were trained on data patterns, enabling automated fixes, which led to a 100% improvement in data quality across the organisation. Predictive analysis was also used to assess customer behaviour, such as identifying the likelihood of customers purchasing device protection when buying a new device, greatly enhancing decision-making processes.
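The interview does not describe the models themselves. As a deliberately simple stand-in, the sketch below "learns" the dominant value shapes of a column from historical data (digits become `9`, letters become `A`), passes values that match, auto-fixes values that match after normalisation, and quarantines the rest. This pattern-profiling approach is an assumption for illustration, not a trained machine learning model, and the phone-number format is hypothetical.

```python
import re
from collections import Counter


def shape(value):
    """Abstract a value into a pattern: digits become 9, letters become A."""
    return re.sub(r"[A-Za-z]", "A", re.sub(r"\d", "9", value))


def learn_patterns(values, min_share=0.05):
    """'Train' on historical values: keep shapes covering at least min_share of them."""
    counts = Counter(shape(v) for v in values)
    total = sum(counts.values())
    return {s for s, n in counts.items() if n / total >= min_share}


def clean(values, patterns, normalise):
    """Pass valid values through, auto-fix fixable ones, quarantine the rest."""
    accepted, quarantined = [], []
    for v in values:
        if shape(v) in patterns:
            accepted.append(v)
        elif shape(normalise(v)) in patterns:
            accepted.append(normalise(v))
        else:
            quarantined.append(v)
    return accepted, quarantined


def normalise_phone(v):
    # Hypothetical fix: keep digits only and re-insert the dash for 7-digit numbers.
    digits = re.sub(r"\D", "", v)
    return f"{digits[:3]}-{digits[3:]}" if len(digits) == 7 else v


history = ["555-0101"] * 60 + ["555-0199"] * 38 + ["5550123", "call me"]
patterns = learn_patterns(history)
print(patterns)  # {'999-9999'}
accepted, quarantined = clean(["555-0142", "555 0143", "5550144", "n/a"], patterns, normalise_phone)
print(accepted)     # ['555-0142', '555-0143', '555-0144']
print(quarantined)  # ['n/a']
```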

RTIH: How are your achievements showcasing the potential for modern data engineering practices to drive operational efficiency and innovation across industries? What is your forecast for future technology transformation?

SP: My work demonstrates how modern data engineering can revolutionise operations and drive innovation across industries, by leveraging data to make smarter, faster decisions. I take pride in a critical retail project in which I saved my organisation $475,000 by automating the creation and compilation of software components.

In another recent transformation project, we saved $1 million in middleware licensing fees by rewriting the data processing and publishing logic using open source Node.js technology. Transitioning from traditional data processing with relational databases to a distributed framework using Hadoop, Apache Spark, Scala, Node.js, and Kafka greatly improved speed and reduced costs.

In another example, a machine learning pipeline built for predictive maintenance helped reduce equipment downtime and improve overall efficiency, showing how proactive, data-driven strategies can optimise business processes. The automated data quality framework I developed ensured 100% accuracy in handling billions of customer records, highlighting the power of automation in maintaining data integrity and scaling operations.
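A predictive-maintenance pipeline ultimately turns sensor readings into early warnings. As a minimal, hypothetical stand-in for a trained model, the sketch below flags readings that deviate sharply from a rolling baseline using a z-score; the window size, threshold, and vibration values are illustrative assumptions.

```python
from collections import deque
from statistics import mean, stdev


def maintenance_alerts(readings, window=20, threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    A simple rolling z-score, used here as a stand-in for a trained model.
    """
    recent = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(recent) == window:
            mu, sigma = mean(recent), stdev(recent)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append(i)
        recent.append(value)
    return alerts


# Hypothetical vibration readings: a stable cycle, then a sudden spike.
vibration = [1.0, 1.1, 0.9, 1.0, 1.05] * 8 + [2.5]
print(maintenance_alerts(vibration))  # [40]
```

A production pipeline would more likely use a model trained on historical failure data, but the structure of scoring each new reading against learned normal behaviour and raising an alert would stay similar.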

As technology rapidly evolves, it is crucial to regularly assess current systems to ensure they remain aligned with modern advancements. Staying current helps organisations avoid the challenges that come with ageing legacy systems, such as high costs, poor scalability, and security gaps.

Looking forward, I believe technology transformation will be driven by the continued integration of AI, ML, and Big Data analytics into all areas of business. We will see the rise of predictive analytics and the Internet of Things (IoT) as organisations focus on real-time insights and decision-making.

Automation and AI-driven intelligence will push industries towards more autonomous systems. Data engineering will play a key role in orchestrating these advancements, and as technologies mature, the emphasis will shift towards ethical AI, data privacy, and ensuring the accountability of AI-driven decisions.

RTIH: You have written that you enjoy mentoring data engineers and promoting data-driven organisational cultures. As a technology leader, how important is training young engineers, and promoting collaboration and an understanding of business objectives?

SP: Mentoring young engineers ethically and effectively is crucial in today’s rapidly evolving world. Structured training and fostering collaboration are vital to building a strong technical team. As a technology leader, I recognise that mentoring goes beyond teaching technical skills; it helps shape engineers' mindsets to think beyond coding and algorithms.

By cultivating a data-driven organisational culture, we can empower engineers to align their problem-solving with broader business objectives, giving their work greater purpose. At the same time, it is essential that they grasp the importance of data privacy, security, and governance frameworks.

Nurturing curiosity and providing the tools to navigate the ever-changing tech landscape are key. Encouraging collaboration brings diverse perspectives together, resulting in more robust and scalable solutions.

Furthermore, fostering accountability and ownership ensures engineers stay aligned with business goals, driving success. By focusing on developing the next generation of engineers and fostering a culture of collaboration, organisations can remain innovative and adaptable to future technological advancements.
