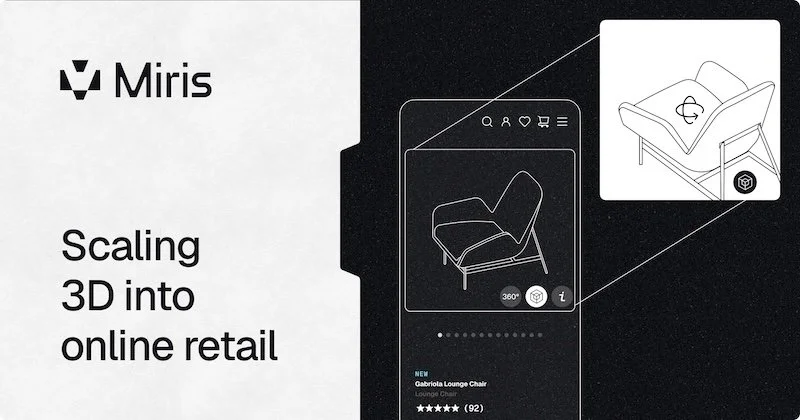

Scaling 3D in online retail: technical constraints are a barrier to telling better product stories

3D product visualisation delivers measurable results. When dog kennel brand Gunner Kennels added AR to their product pages, they saw a 40% increase in order conversion and a 5% reduction in returns. Fashion retailer Rebecca Minkoff found customers 65% more likely to purchase after interacting with products in AR. Polish furniture maker Oakywood drove a 250% increase in sales of key products after implementing 3D visualisation.

This represents meaningful revenue growth. Yet despite years of proven ROI, 3D remains a boutique solution. The answer to this puzzle isn't a lack of demand or insufficient hardware. It's that the current download-first delivery model can't support compelling product stories at scale.

Consumers are either stuck waiting for high-fidelity assets to load or served cheap, generic 3D that fails to communicate product quality. Because of this, we rarely take the risk of building anything more compelling than a simple product viewer.

The real problem: today’s constrained experiences

Right now, if you are implementing 3D product visualisations, you are working under heavy technical constraints that dictate the quality of your visual stories. Customers expect photorealistic representations. A couch should show the intricate texture of its fabric. Sneakers should reveal every stitch and material variation. But delivering that experience economically is incredibly challenging.

It starts with the desire for high-fidelity 3D assets, which means large files, and large files take time to download. Every second of wait time kills conversion. Beyond five seconds, we begin to lose customers. Thirty seconds is unacceptable. And because most shopping happens on mobile devices with limited processing power and memory, the problem gets far worse.

So we optimise. We hire specialised agencies to compress our assets, a process that means reducing polygon counts and texture resolution, simplifying materials, and substituting generic tiling detail for product-specific fidelity. We shrink a 100MB file down to 5-10MB so it loads fast enough. For automotive configurators working with CAD files, we’re looking at gigabytes of data that need the same treatment.
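The link between file size and wait time is simple arithmetic. The sketch below estimates transfer time only, assuming a steady 20 Mbps mobile connection (an illustrative figure, not one from this article) and ignoring latency, decompression, and render setup; `download_seconds` and `MOBILE_MBPS` are hypothetical names.

```python
def download_seconds(size_mb: float, bandwidth_mbps: float) -> float:
    """Idealised transfer time: megabytes to megabits, divided by link speed.

    Ignores latency, TCP ramp-up, decompression and GPU upload, so real
    waits are longer than this.
    """
    return size_mb * 8 / bandwidth_mbps


MOBILE_MBPS = 20.0  # hypothetical sustained mobile throughput

for size_mb in (100, 10, 5):
    print(f"{size_mb:>3} MB -> {download_seconds(size_mb, MOBILE_MBPS):4.1f} s")
# 100 MB -> 40.0 s
#  10 MB ->  4.0 s
#   5 MB ->  2.0 s
```

Under that assumption a 100MB asset needs about 40 seconds, past the point where customers give up, while a 5-10MB asset lands at 2-4 seconds, inside the five-second window. That gap is what the optimisation work is chasing.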

The unfortunate result is 3D that looks cheap compared with the physical products we’re selling. Assets optimised for speed and performance often end up less effective than the 2D images and video they were meant to improve. Customers can't evaluate products with confidence because the representations don't communicate material quality or construction detail. As a result, we stop putting energy and effort into the 3D experience.

This isn't just a visual problem. According to a 2025 SCAYLE survey of 23,000 consumers across 30 countries, 57% of US shoppers prefer hybrid shopping that mixes online research with in-store purchases.

The data shows why: 59% of consumers still visit physical stores specifically to see and touch products they've already researched online. They're not nostalgic for bricks-and-mortar retail. They're responding to a practical gap. Flat 2D images can't communicate texture, scale, or material quality. Cheap-looking 3D doesn't solve that problem.

The business case for solving this is clear. Retailers who successfully implement high-fidelity 3D visualisation see conversion rates increase by up to 40% and return rates drop by 30-40%, according to industry data from BigCommerce and Threekit.

When customers can rotate products 360 degrees, zoom into material details, and see items in context, they buy with confidence. What stands in the way is the technical constraint on delivering this experience at scale.

That constraint keeps us from telling effective product stories in an increasingly loud commercial landscape. We’re forced to choose between quality and performance, between fidelity and economics. That's the delivery problem adaptive spatial streaming solves.

A visual world with no limits

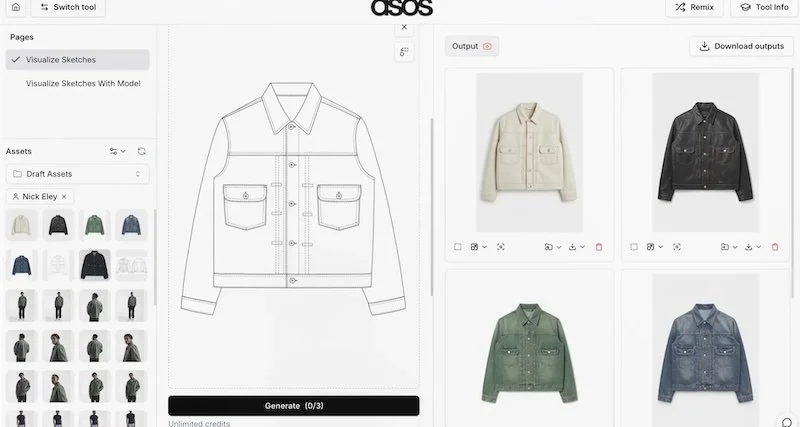

Let’s assume for a moment that we could remove the delivery constraint entirely. Start with photogrammetry or high-fidelity scans of real products without thinking about polygon budgets.

We place products in rich, branded environments that tell the stories we want to convey: a photorealistic sofa styled in a lifelike living room rather than floating in a white void, fashion shown in a real-world context rather than against a sterile backdrop.

If we no longer have to worry about manually optimising our assets, we can focus on creating the highest-fidelity content possible. When a product changes or a new colorway launches, we update the asset and it is ready to deliver, with no re-optimisation pass. The creative focus shifts from navigating technical constraints to designing customer experiences.

For end customers, there should be no trade-off between quality and performance. They would see a representation within milliseconds that refines to high fidelity as they interact. Whether they’re using a new flagship iPhone or a budget Android tablet, the same asset would adapt automatically, without your intervention. Switching products would involve no wait, so customers could browse your entire catalog as fluidly as scrolling through images.

That's the experience behind the conversion lifts in those case studies. The question is how to make it technically and economically feasible.

Traditional 3D delivery methods don't scale

Asset creation is no longer the bottleneck. Tools for photogrammetry, 3D scanning, and procedural generation have matured significantly. But the more high-fidelity data these tools produce, the more they compound the real constraint: delivery infrastructure and the optimisation grind it forces.

Traditional 3D delivery follows a download-first model. A user clicks on a product. The browser downloads the entire 3D file. The device renders it locally. Only then can the user interact. This creates two compounding problems.

Upstream: Creating Assets You Can't Actually Deliver

Photorealistic assets require high resolution textures (often 4K or higher), dense polygon meshes to capture geometric detail, complex material shaders for realistic surfaces, and accurate lighting information.
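To see why 4K textures are expensive on phones, it helps to count the GPU memory a single texture occupies. This is a minimal sketch assuming uncompressed RGBA8 textures (taking "4K" as 4096 x 4096) with a full mip chain and three maps per material; `texture_bytes` and `MAPS_PER_MATERIAL` are hypothetical, and GPU block-compressed formats such as ASTC or BC7 shrink these figures considerably.

```python
MIB = 1024 * 1024


def texture_bytes(size_px: int, bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """GPU memory for one square, uncompressed texture (RGBA8 by default).

    With mipmaps, each level halves the side length down to 1x1, which adds
    roughly one third to the base level's size.
    """
    if size_px < 1:
        raise ValueError("size_px must be positive")
    total, side = 0, size_px
    while True:
        total += side * side * bytes_per_texel
        if not mipmaps or side == 1:
            return total
        side //= 2


MAPS_PER_MATERIAL = 3  # hypothetical: base colour, normal, packed roughness/metal/AO

for size in (4096, 2048):
    per_map = texture_bytes(size) / MIB
    print(f"{size}px: {per_map:.1f} MiB per map, "
          f"{per_map * MAPS_PER_MATERIAL:.1f} MiB per material")
# 4096px: 85.3 MiB per map, 256.0 MiB per material
# 2048px: 21.3 MiB per map, 64.0 MiB per material
```

The numbers explain why dropping to 2K textures is such a common compromise: halving the side length cuts memory by a factor of four.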

For automotive configurators working with CAD files, we’re talking gigabytes of data once converted to meshes. Even furniture or fashion products easily reach 50-100MB per SKU before any optimisation.

For a retailer with thousands of SKUs, storage and management costs alone become prohibitive. But those assets still need to reach customers through web infrastructure designed for smaller file sizes.

Downstream: The Optimisation Grind

Specialised teams are forced to perform controlled demolition on 3D assets to shrink a >100MB file (or multi-gigabyte CAD conversion) down to something that can load in a reasonable timeframe, usually 5-10MB, while keeping it visually acceptable. That means:

● Reducing polygon counts without losing shape

● Compressing textures without obvious artifacts

● Substituting generic tiling detail for product-specific detail

● Simplifying materials while maintaining some sense of realism

● Optimising for the weakest device that might encounter the asset
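Polygon reduction follows the same budget logic. The sketch below estimates how many triangles fit in a byte budget, assuming an uncompressed indexed mesh with position, normal, and UV per vertex, and the rough rule (from Euler's formula for large closed meshes) that vertices number about half the triangles. The names and the 4MB texture share in the usage line are hypothetical; real meshes carry extra vertices at UV seams and hard edges, and codecs such as Draco or meshoptimizer compress geometry further.

```python
# Hypothetical uncompressed vertex layout: position, normal, UV as 32-bit floats.
BYTES_PER_VERTEX = 3 * 4 + 3 * 4 + 2 * 4   # 32 bytes
INDEX_BYTES_PER_TRIANGLE = 3 * 4           # three 32-bit indices per triangle


def mesh_bytes(triangles: int) -> int:
    """Approximate size of an indexed triangle mesh.

    Uses V ~= F / 2, which Euler's formula gives for large closed meshes,
    so each triangle costs about half a vertex plus its three indices.
    """
    vertices = triangles // 2
    return vertices * BYTES_PER_VERTEX + triangles * INDEX_BYTES_PER_TRIANGLE


def max_triangles(budget_bytes: int) -> int:
    """Largest triangle count whose mesh fits inside budget_bytes."""
    # Two triangles cost one vertex plus six indices (56 bytes here).
    bytes_per_two_triangles = BYTES_PER_VERTEX + 2 * INDEX_BYTES_PER_TRIANGLE
    return 2 * budget_bytes // bytes_per_two_triangles


print(mesh_bytes(1_000_000))  # 28000000 bytes for a 1M-triangle scan

TEXTURE_BYTES = 4_000_000  # hypothetical share of a 10MB total budget
print(max_triangles(10_000_000 - TEXTURE_BYTES))  # 214285
```

At roughly 28 bytes per triangle, a million-triangle scan is about 28MB of geometry before any textures, which is why decimation is usually the first step.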

This is time-consuming and expensive work requiring skilled 3D artists, since each asset needs individual attention. Tools can speed up parts of the process, but every step is a compromise made after creation, which makes the pipeline fragile: if the source product changes, optimisation starts over.

The economic reality is that this level of 3D tuning only makes sense for high-margin, high-revenue products. That forces 3D to be applied selectively to a few hero SKUs rather than as a baseline experience across the entire catalog.

And here's the deeper problem: this workflow pulls our focus away from what actually drives conversion. Instead of thinking about how to present products in contextually rich scenes that communicate brand values, we focus on technical constraints. Can we reduce this texture to a tileable 2K resolution? How many polygons can we cut from this mesh and still find it acceptable? Will this material shader work on a five-year-old Android phone?

The constraints of delivering assets end up dictating how we build the creative, rather than the creative driving the experience. That's backwards.

A streaming architecture changes the entire process

Instead of a download-then-render approach, Miris streams 3D spatial data (geometry, appearance, and material properties) as a continuous, adaptive process. Think of it like the shift that adaptive streaming brought to video more than a decade ago.

Before adaptive streaming, online video was delivered as a single file at a fixed quality, and viewers often had to wait for much of it to buffer before playback could begin. With adaptive streaming, video starts playing almost immediately at reduced quality, then steps up as more data arrives, adapting in real time to bandwidth and device capabilities.
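For readers who haven't looked inside a video player, the core of adaptive bitrate selection fits in a few lines: measure throughput, then pick the highest-quality rendition that fits under it with some headroom. This is a minimal throughput-only sketch; the ladder, the 0.8 safety factor, and the function names are illustrative assumptions, and production HLS or DASH players also weigh buffer levels.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Rendition:
    height: int          # vertical resolution, e.g. 1080
    bitrate_kbps: int    # encoded bitrate of this rendition


# Hypothetical bitrate ladder; real services tune their own.
LADDER = (
    Rendition(240, 400),
    Rendition(480, 1200),
    Rendition(720, 2800),
    Rendition(1080, 5000),
)


def pick_rendition(throughput_kbps: float,
                   ladder: Sequence[Rendition] = LADDER,
                   safety: float = 0.8) -> Rendition:
    """Throughput-based ABR: the best rendition that fits inside a safety
    margin of measured throughput, or the lowest rung if none fits."""
    budget = throughput_kbps * safety
    affordable = [r for r in ladder if r.bitrate_kbps <= budget]
    if not affordable:
        return min(ladder, key=lambda r: r.bitrate_kbps)
    return max(affordable, key=lambda r: r.bitrate_kbps)


# Playback starts on a low rung and climbs as throughput estimates improve.
for measured_kbps in (600, 2000, 4000, 8000):
    print(f"{measured_kbps} kbps -> {pick_rendition(measured_kbps).height}p")
# 600 kbps -> 240p, 2000 kbps -> 480p, 4000 kbps -> 720p, 8000 kbps -> 1080p
```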

Miris applies a similar model to navigable 3D space. Devices receive spatial data that renders immediately and refines progressively, adapting to network conditions, hardware capabilities, and viewing focus. Viewers see an initial representation in milliseconds that continuously sharpens as more data streams in, prioritising the detail that matters most for whatever is in view at that moment.

This architectural shift enables three critical capabilities for configurator builders:

1. Progressive refinement without upfront downloads

Gaussian splats, which represent a scene as a large set of small, semi-transparent 3D Gaussians rather than as a triangle mesh, are naturally suited to progressive enhancement. An initial subset of splats arrives quickly, providing immediate interactivity at reduced fidelity. As additional data streams in, the client progressively refines the representation. This matches the consumption pattern of unreliable consumer networks far better than mesh-based approaches, which typically require the complete topology to be transmitted before any rendering can occur.
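The following sketch illustrates why splats suit progressive delivery: any subset of splats can be drawn, so a sender can order them by importance and the client can render after the first chunk. It is a hypothetical illustration, not Miris's protocol; the `importance` heuristic (opacity times the product of the three scale axes), the chunk size, and all class and function names are assumptions, and real splats also carry rotation and colour data that are omitted here.

```python
from dataclasses import dataclass
from typing import Iterator, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Splat:
    position: Vec3   # centre of the 3D Gaussian
    scale: Vec3      # extent along its three principal axes
    opacity: float   # 0..1; rotation and colour omitted for brevity


def importance(s: Splat) -> float:
    """Hypothetical heuristic: big, opaque splats shape the image most."""
    sx, sy, sz = s.scale
    return s.opacity * sx * sy * sz


def stream_chunks(splats: List[Splat], chunk_size: int) -> Iterator[List[Splat]]:
    """Sender: emit splats in descending importance, chunk by chunk."""
    ordered = sorted(splats, key=importance, reverse=True)
    for start in range(0, len(ordered), chunk_size):
        yield ordered[start:start + chunk_size]


class ProgressiveClient:
    """Receiver: the scene is drawable as soon as the first chunk arrives."""

    def __init__(self) -> None:
        self.splats: List[Splat] = []

    def receive(self, chunk: List[Splat]) -> None:
        # Splats are independent primitives, so new ones are simply appended;
        # a real renderer would upload them to the GPU and redraw here.
        self.splats.extend(chunk)

    def coverage(self, total: int) -> float:
        return len(self.splats) / total


# Usage: a synthetic 1,000-splat scene streamed in four chunks.
scene = [
    Splat((float(i), 0.0, 0.0), (0.1 * (i % 5 + 1),) * 3, 0.5 + 0.05 * (i % 10))
    for i in range(1000)
]
client = ProgressiveClient()
for n, chunk in enumerate(stream_chunks(scene, chunk_size=250), start=1):
    client.receive(chunk)
    print(f"after chunk {n}: {client.coverage(len(scene)):.0%} of splats, already drawable")
```

The design choice worth noticing is the ordering: because the most visually significant splats go first, the earliest frames already show the product's overall form, and later chunks only add refinement.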

2. Adaptive fidelity based on context

The streaming protocol adapts the underlying Gaussian splat representation in real-time based on network conditions (bandwidth, jitter, packet loss), client device capabilities (GPU, memory, compute budget), content characteristics (geometric complexity, motion dynamics), and user interaction patterns (camera direction, navigation speed). The system automatically optimises what data to send and how to send it, moment-by-moment, to keep experiences fluid.
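To make the list of signals concrete, here is a minimal sketch of how a sender might turn them into a per-update splat budget and a view-based priority. It is not Miris's algorithm; every constant (32 bytes per splat, a 100ms update window, 80% headroom), the `1 / (1 + camera_speed)` motion damping, and all names are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence

# Hypothetical tuning constants, not values from any real product.
BYTES_PER_SPLAT = 32        # assumed compressed size of one splat
UPDATE_INTERVAL_S = 0.1     # how often the sender re-plans
SAFETY = 0.8                # leave headroom below measured throughput


@dataclass
class ClientState:
    bandwidth_mbps: float     # recently measured throughput
    packet_loss: float        # fraction of packets lost, 0..1
    gpu_splat_capacity: int   # splats the device can draw at its target frame rate
    camera_speed: float       # how fast the viewer is moving the camera


def splat_budget(state: ClientState, resident_splats: int) -> int:
    """Number of new splats to send in the next update window."""
    # Network: usable bytes per window after loss and a safety margin.
    goodput_bps = state.bandwidth_mbps * 1e6 * (1.0 - state.packet_loss) * SAFETY
    network_cap = int(goodput_bps * UPDATE_INTERVAL_S / 8 / BYTES_PER_SPLAT)
    # Device: never push more than the GPU can draw.
    device_cap = max(0, state.gpu_splat_capacity - resident_splats)
    # Interaction: fine detail is hard to see mid-swipe, so defer it.
    motion_factor = 1.0 / (1.0 + state.camera_speed)
    return int(min(network_cap, device_cap) * motion_factor)


def view_priority(splat_pos: Sequence[float], cam_pos: Sequence[float],
                  cam_forward: Sequence[float]) -> float:
    """Rank splats near the centre of view and close to the camera first.

    cam_forward is assumed to be a unit vector.
    """
    offset = [p - c for p, c in zip(splat_pos, cam_pos)]
    dist = math.sqrt(sum(x * x for x in offset))
    if dist == 0.0:
        return math.inf
    facing = sum(a * b for a, b in zip(offset, cam_forward)) / dist  # cosine of angle
    return max(facing, 0.0) / dist  # splats behind the camera get zero priority


phone = ClientState(bandwidth_mbps=100.0, packet_loss=0.0,
                    gpu_splat_capacity=3_000_000, camera_speed=0.0)
tablet = ClientState(bandwidth_mbps=5.0, packet_loss=0.02,
                     gpu_splat_capacity=500_000, camera_speed=0.0)
print(splat_budget(phone, resident_splats=0), splat_budget(tablet, resident_splats=0))
```

Taking the minimum of the network and device limits means the tighter constraint always wins, so one asset can serve a flagship phone on fast Wi-Fi and a budget tablet on a congested network without separate builds.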

3. Serverless, hardware-independent distribution

Because Miris transmits spatial data rather than rendered pixels, distributing content to users does not depend on cloud GPU or CPU servers; rendering happens on the viewer's device. The heavy GPU work happens once, upfront, when assets are conditioned for streaming.

This removes server rendering capacity as a constraint. The economic model shifts from hardware-constrained (expensive, fixed capacity) to bandwidth-constrained (scales with actual usage). Costs scale linearly with usage, not with the GPU capacity you must provision for peak concurrent users. As usage grows, volume-based pricing tiers reduce your per-unit costs automatically.
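The difference between the two cost models shows up most clearly in traffic spikes. The sketch below contrasts a server-rendered setup, with GPUs provisioned for peak concurrency, against pay-per-gigabyte delivery. Every price and capacity in it is a hypothetical placeholder chosen only to show the shape of each curve, so the absolute dollar figures mean nothing; the function names are likewise illustrative.

```python
import math

# Hypothetical placeholder prices and capacities, chosen only to show the
# shape of each cost curve, not to compare real vendors.
GPU_HOURLY_USD = 1.00       # one cloud GPU instance, per hour
SESSIONS_PER_GPU = 4        # concurrent server-rendered sessions per instance
EGRESS_USD_PER_GB = 0.05    # delivery price per gigabyte
GB_PER_SESSION = 0.05       # spatial data streamed per viewing session


def pixel_streaming_cost(peak_concurrent: int, hours: float) -> float:
    """Server-rendered model: GPUs sized for peak concurrency for the whole
    window (no autoscaling), paid for whether busy or idle."""
    gpus = math.ceil(peak_concurrent / SESSIONS_PER_GPU)
    return gpus * GPU_HOURLY_USD * hours


def data_streaming_cost(sessions: int) -> float:
    """Client-rendered model: pay only for bytes actually delivered."""
    return sessions * GB_PER_SESSION * EGRESS_USD_PER_GB


# The same 10,000 daily sessions, spread out versus packed into a sale spike.
for label, peak in (("even traffic", 100), ("flash-sale spike", 1000)):
    print(f"{label}: servers ${pixel_streaming_cost(peak, 24):,.0f}, "
          f"data ${data_streaming_cost(10_000):,.0f}")
```

With identical total sessions, the spike multiplies the server bill tenfold in this sketch, while the delivery bill, which depends only on bytes sent, does not move.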

What this means for implementation

When the delivery constraint disappears, our workflows change fundamentally.

Economics That Actually Scale

Traditional 3D implementation requires modelling or scanning each SKU at a cost of $500 to $2,000, then optimisation for web delivery at another few hundred dollars, plus testing across devices. All-in, prices range from $1,000 to $3,200 per product. For a mid-size retailer with a thousand SKUs, that's a $1 million to $3.2 million investment before a single 3D model is served to a customer.
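The catalogue arithmetic is worth spelling out, because it explains why 3D ends up reserved for hero products. This sketch just multiplies the all-in per-SKU range quoted above by a SKU count; `catalogue_cost_range` is a hypothetical helper, and the 50-SKU line mirrors the hero-product selections discussed later.

```python
from typing import Tuple


def catalogue_cost_range(skus: int, low_per_sku: int, high_per_sku: int) -> Tuple[int, int]:
    """Upfront cost of converting a catalogue when every SKU is modelled,
    optimised and tested individually (all-in per-SKU range from the text)."""
    return skus * low_per_sku, skus * high_per_sku


low, high = catalogue_cost_range(skus=1_000, low_per_sku=1_000, high_per_sku=3_200)
print(f"${low:,} to ${high:,}")  # $1,000,000 to $3,200,000

hero_low, hero_high = catalogue_cost_range(skus=50, low_per_sku=1_000, high_per_sku=3_200)
print(f"${hero_low:,} to ${hero_high:,}")  # $50,000 to $160,000
```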

Streaming-based implementation inverts this model. Instead of heavy upfront costs per SKU, it requires a one-time platform integration. As AI-driven 3D generation from photography improves (converting 2D product photos into 3D representations), the marginal cost of adding another product keeps falling. And no manual optimisation is required, because the streaming platform handles device adaptation automatically.

Consider a retailer doing $50 million in online revenue. A 20% conversion increase (well below what the case studies show) generates $10 million in additional annual revenue, assuming traffic and average order value stay constant. Add savings from fewer returns equal to a modest 3% of revenue ($1.5 million), and you're looking at an $11.5 million annual impact.
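The revenue estimate above rests on two assumptions that the arithmetic makes implicitly. The sketch below states them in code: revenue grows in proportion to conversion rate (traffic and average order value unchanged), and avoided returns are valued as a flat share of current revenue. `annual_impact` is a hypothetical helper, and the inputs are the article's illustrative figures, not measured results.

```python
from typing import Dict


def annual_impact(online_revenue: float, conversion_lift: float,
                  returns_saving_share: float) -> Dict[str, float]:
    """Back-of-the-envelope impact, under two stated assumptions:
    revenue scales 1:1 with conversion rate (traffic and average order value
    unchanged), and avoided returns are worth a fixed share of current revenue."""
    extra_revenue = online_revenue * conversion_lift
    returns_saved = online_revenue * returns_saving_share
    return {
        "extra_revenue": extra_revenue,
        "returns_saved": returns_saved,
        "total": extra_revenue + returns_saved,
    }


impact = annual_impact(online_revenue=50_000_000, conversion_lift=0.20,
                       returns_saving_share=0.03)
for key, value in impact.items():
    print(f"{key}: ${value:,.0f}")
# extra_revenue: $10,000,000
# returns_saved: $1,500,000
# total: $11,500,000
```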

Moving from Selective to Universal Implementation

Instead of handpicking 20-50 hero products for 3D treatment, it is now possible to convert thousands of SKUs across every price point. Seasonal collections launch with full 3D on day one rather than catching up months later. Every colorway, every variation gets treated equally because the per-unit cost has collapsed.

The behavioral shift matters most. When customers can thoroughly evaluate products before purchase, confidence goes up and returns go down. The "not what I expected" problem shrinks. And when someone can confidently visualise multiple products together in their space, cross-sells and upsells start working the way they're supposed to.

Ready to explore what streaming-enabled 3D could mean for your catalogue? Connect with our team to see how catalogue-scale 3D visualisation becomes economically viable today.
