How do enterprises in the UK implement MLOps successfully?

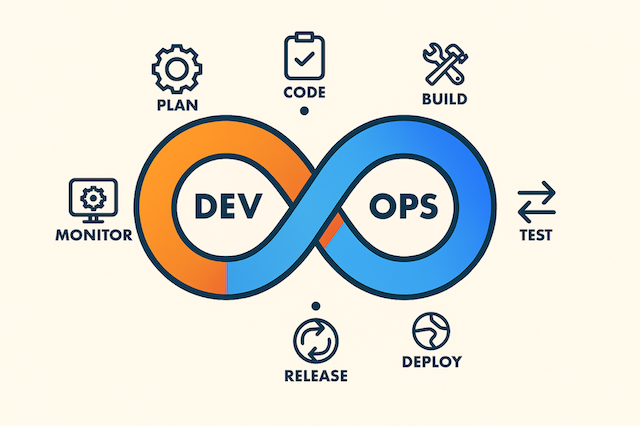

MLOps, or Machine Learning Operations, is the practice of applying DevOps principles to machine learning workflows. It encompasses the processes, tools, and best practices needed to efficiently develop, deploy, and maintain ML models in production.

By bridging the gap between data science, engineering, and operations, MLOps ensures that ML initiatives are scalable, reliable, and aligned with business objectives.

Why MLOps Matters for Enterprises Today

As enterprises increasingly rely on AI to gain a competitive edge, MLOps has become essential. It enables organisations to accelerate time-to-market for ML solutions, maintain model performance, ensure regulatory compliance, and reduce operational risks. Without proper MLOps practices, enterprises risk deploying models that are inaccurate, non-compliant, or difficult to maintain.

The UK has witnessed rapid adoption of AI and machine learning across industries such as finance, healthcare, retail, and manufacturing. Enterprise AI companies in the UK are leveraging MLOps to manage growing ML portfolios, streamline operations, and meet strict regulatory requirements.

According to recent surveys, a majority of UK enterprises now view MLOps not as an optional framework but as a strategic necessity for AI adoption.

Foundations of Successful MLOps Implementation

1. Building Cross-Functional Teams

Successful MLOps relies on collaboration between data scientists, ML engineers, DevOps teams, and business stakeholders. Cross-functional teams ensure that ML models are not only technically sound but also aligned with business objectives. Clear communication between teams accelerates experimentation, reduces bottlenecks, and supports continuous model improvement.

2. Setting Clear Governance, Compliance, and Ethical AI Frameworks

Enterprises must establish governance frameworks to ensure AI initiatives meet regulatory and ethical standards. This includes data privacy compliance, model auditing, bias mitigation, and transparent reporting mechanisms. Robust governance ensures that ML deployments are responsible and sustainable over time.

3. Ensuring Data Quality and Robust Infrastructure

High-quality data is the backbone of any ML system. Enterprises need reliable pipelines for data ingestion, preprocessing, and storage, along with infrastructure that supports scalable experimentation and production. Cloud-based, on-premise, or hybrid solutions provide the flexibility required to manage large-scale datasets and model workloads effectively.
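As an illustration of what a data quality check in an ingestion pipeline might look like, here is a minimal sketch in plain Python. The names `QualityReport` and `check_rows`, and the field names in the usage note, are hypothetical and not taken from any specific tool; a production pipeline would more likely rely on a dedicated validation library.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass
class QualityReport:
    """Summary of a batch check (hypothetical structure for illustration)."""
    total_rows: int
    missing_required: int
    out_of_range: int

    @property
    def passed(self) -> bool:
        # The batch passes only if no row violated a rule.
        return self.missing_required == 0 and self.out_of_range == 0


def check_rows(
    rows: List[Dict[str, Any]],
    required: List[str],
    ranges: Dict[str, Tuple[float, float]],
) -> QualityReport:
    """Count rows with missing required fields or out-of-range values."""
    missing = 0
    out_of_range = 0
    for row in rows:
        # A row with any required field absent or None counts as missing.
        if any(row.get(name) is None for name in required):
            missing += 1
            continue
        # Otherwise, count the row once if any ranged field is outside its bounds.
        for name, (low, high) in ranges.items():
            value = row.get(name)
            if value is not None and not (low <= value <= high):
                out_of_range += 1
                break
    return QualityReport(len(rows), missing, out_of_range)
```

A pipeline step could call `check_rows(batch, ["age", "income"], {"age": (0, 120)})` and refuse to pass the batch downstream when `passed` is false.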

Core Components of MLOps in Practice

Model Development, Versioning, and Reproducibility

Version control for datasets, code, and models ensures reproducibility and traceability. Enterprises adopt tools that allow them to track changes, roll back updates, and replicate experiments seamlessly, ensuring consistent model performance across production environments.
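One simple way to make a training run traceable is to derive a single fingerprint from the dataset contents, the hyperparameters, and the code version. The sketch below uses only the Python standard library; the function name `fingerprint` and its arguments are assumptions made for illustration, not the interface of any particular versioning tool.

```python
import hashlib
import json
from typing import Any, Dict


def fingerprint(data: bytes, params: Dict[str, Any], code_version: str) -> str:
    """Return a SHA-256 hex digest identifying one (data, params, code) combination."""
    digest = hashlib.sha256()
    # Hash the dataset bytes first so large data contributes a fixed-size value.
    digest.update(hashlib.sha256(data).digest())
    digest.update(b"\x00")
    # sort_keys makes the result independent of dictionary key order.
    digest.update(json.dumps(params, sort_keys=True).encode("utf-8"))
    digest.update(b"\x00")
    # code_version is assumed to be something like a commit hash.
    digest.update(code_version.encode("utf-8"))
    return digest.hexdigest()
```

Storing this value alongside each trained model means two runs with the same fingerprint used the same inputs, and any change to data, parameters, or code produces a different one.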

CI/CD Pipelines for ML Workflows

Continuous Integration and Continuous Deployment (CI/CD) pipelines automate the testing, validation, and deployment of ML models. These pipelines reduce human error, accelerate iteration cycles, and enable reliable model rollouts in dynamic business environments.
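The validation stage of such a pipeline can be sketched as a gate that compares a candidate model's metrics against the current baseline. The function below is a hypothetical example: the metric names (`accuracy`, `latency_ms`) and the default thresholds are assumptions chosen for illustration, and real thresholds would depend on the use case.

```python
from typing import Dict, List


def validation_gate(
    candidate: Dict[str, float],
    baseline: Dict[str, float],
    min_accuracy: float = 0.80,
    max_regression: float = 0.01,
    max_latency_ms: float = 100.0,
) -> List[str]:
    """Return a list of failure reasons; an empty list means the gate passes."""
    failures: List[str] = []
    # Absolute quality floor.
    if candidate["accuracy"] < min_accuracy:
        failures.append("accuracy below minimum")
    # Do not allow the candidate to be noticeably worse than the baseline.
    if baseline["accuracy"] - candidate["accuracy"] > max_regression:
        failures.append("accuracy regressed against baseline")
    # Serving latency budget.
    if candidate["latency_ms"] > max_latency_ms:
        failures.append("latency above budget")
    return failures
```

A CI job could run this after evaluation and exit with a non-zero status when the returned list is not empty, which blocks the deployment step.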

Monitoring and Observability

Once deployed, ML models require continuous monitoring for performance, drift, and compliance. Observability tools track metrics such as accuracy, fairness, latency, and resource utilisation, alerting teams to potential issues before they impact business outcomes.
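Drift on a single numeric feature is often measured with the Population Stability Index (PSI), which compares the binned distribution of training data against recent production data. The sketch below is one minimal way to compute it in plain Python; the function name, the equal-width binning, and the small floor used to avoid taking the logarithm of zero are all choices made here for illustration. A PSI above roughly 0.2 is a commonly quoted rule of thumb for significant drift, not a fixed standard.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a new sample."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins
    if width == 0:
        width = 1.0  # all reference values identical; avoid division by zero

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            # Values outside the reference range fall into the first or last bin.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # Floor each proportion so empty bins do not cause log(0).
        return [max(count / len(values), 1e-6) for count in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A monitoring job could compute this per feature on a schedule and raise an alert, or start a retraining workflow, when the value crosses the chosen threshold.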

Scalability and Cloud/On-Premise Hybrid Setups

UK enterprises often leverage hybrid infrastructure, combining cloud flexibility with on-premise security. Scalable setups ensure that ML workloads can adapt to changing demand, support high-traffic applications, and manage compute-intensive operations efficiently.

Leveraging Machine Learning Operations for Enterprise AI Solutions

Machine learning operations (MLOps) provides the core capabilities enterprises need to turn data science insights into scalable AI solutions. By structuring ML pipelines and using a centralised model registry, teams can efficiently manage production models, track versions, and coordinate new experiment cycles.
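To make the idea of a model registry concrete, here is a minimal in-memory sketch. The class and method names (`ModelRegistry`, `register`, `promote`, `production`) and the stage labels are hypothetical; real registries persist this information and store the model artefacts as well.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: Dict[str, float]
    stage: str = "staging"  # one of: staging, production, archived


class ModelRegistry:
    """Tracks versions of named models and which one serves production."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, metrics: Dict[str, float]) -> ModelVersion:
        # Versions are numbered from 1 in registration order.
        versions = self._versions.setdefault(name, [])
        entry = ModelVersion(name, len(versions) + 1, dict(metrics))
        versions.append(entry)
        return entry

    def promote(self, name: str, version: int) -> ModelVersion:
        versions = self._versions.get(name, [])
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name} has no version {version}")
        # Archive whichever version currently serves production.
        for entry in versions:
            if entry.stage == "production":
                entry.stage = "archived"
        target = versions[version - 1]
        target.stage = "production"
        return target

    def production(self, name: str) -> Optional[ModelVersion]:
        for entry in self._versions.get(name, []):
            if entry.stage == "production":
                return entry
        return None
```

Keeping at most one production version per model name makes rollbacks simple: promoting an earlier version archives the current one in the same call.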

A robust MLOps framework ensures that data preparation and feature engineering are standardised, enabling reproducible model training and deployment. Once models are released through a deployment pipeline, active performance monitoring helps detect drift, maintain accuracy, and trigger retraining when necessary.

Enterprises also leverage a modern technology stack to support diverse use cases, including computer vision, natural language processing, and predictive analytics. By combining these capabilities, organisations can streamline experimentation, accelerate new experiment cycles, and maintain reliable, scalable production models that drive business value.

Example: TT PSC

Transition Technologies PSC (TT PSC), a leading enterprise AI company in the UK, has successfully implemented MLOps to streamline its machine learning lifecycle. By building a centralised MLOps platform, TT PSC has enabled cross-team collaboration, automated model testing, and active performance monitoring.

This structured approach has significantly reduced time-to-deployment, improved model accuracy, and ensured compliance, demonstrating how robust machine learning operations can generate tangible business value.

Website: ttpsc.com

Case Studies: TT PSC

1. Legacy Software Modernisation for a Global Cybersecurity Leader

Challenge: A global leader in cybersecurity needed to modernise its flagship Privileged Access Management (PAM) system, which had not been updated in over seven years.

Solution: TT PSC partnered with the company to replace the outdated, unstable automation framework with a scalable, Python-based solution. The new system was integrated with CI/CD pipelines, fully remote, and ready for future operating system releases.

Outcome: The modernisation led to faster releases, full regression coverage, and a cultural shift towards automation-first development.

2. Revolutionising Semiconductor Manufacturing: Optimising Filtration with ML and IoT

Challenge: An Asian chemical company operating in the semiconductor manufacturing sector aimed to enhance its production quality assurance processes by implementing advanced data analytics and anomaly detection solutions.

Solution: TT PSC applied machine learning (ML) models and advanced IoT-based data analytics, with the goal of ensuring higher consistency in product quality and minimising manufacturing errors.

Outcome: The implementation led to improved product quality and reduced manufacturing errors, demonstrating the effective application of ML and IoT in industrial settings.

How to Implement MLOps Successfully

For UK enterprises, successful MLOps implementation goes beyond tools - it requires strategic alignment across teams, robust governance, and a focus on data quality and infrastructure. Cross-functional collaboration between data scientists, machine learning engineers, and software engineers ensures that every stage of the machine learning lifecycle - from exploratory data analysis and feature engineering to model training and deployment - is efficient and reproducible.

A structured continuous delivery pipeline automates the release of machine learning projects, allowing trained and validated models to be promoted from the development or experiment environment to a production prediction service with minimal manual handover.

This process separates the data analysis and model development done by data scientists from operational responsibilities, while keeping robust model monitoring in place for new data, test data, and ongoing performance drift.

Enterprises leverage proprietary data, data processing, and ML algorithms to build reliable AI solutions, including generative AI, at scale. Modern MLOps platforms, often built on cloud infrastructure such as Google Cloud, ensure scalable model workflows and support reproducible experiment cycles, allowing teams to iterate on experiments efficiently while maintaining high-quality machine learning models.

By following these practices, UK enterprises can deliver business value faster, maintain compliance, and ensure that their AI initiatives remain reliable and scalable across the organisation.
